Improving mathematical information retrieval

Postdoctoral researcher Besat Kassaie, Dr. Andrew Kane and Distinguished Professor Emeritus Frank Tompa have won a Best Paper Award at DocEng’25, the 25th ACM Symposium on Document Engineering. Their paper, Exploiting Query Reformulation and Reciprocal Rank Fusion in Math-Aware Search Engines, introduces new methods that improve how search engines handle mathematical queries.

“Congratulations to Besat, Andrew and Frank,” said Raouf Boutaba, University Professor and Director of the Cheriton School of Computer Science. “Their work demonstrates how combining large language models for query reformulation along with reciprocal rank fusion can substantially improve mathematical information retrieval, achieving far better performance than relying on the original query alone.”

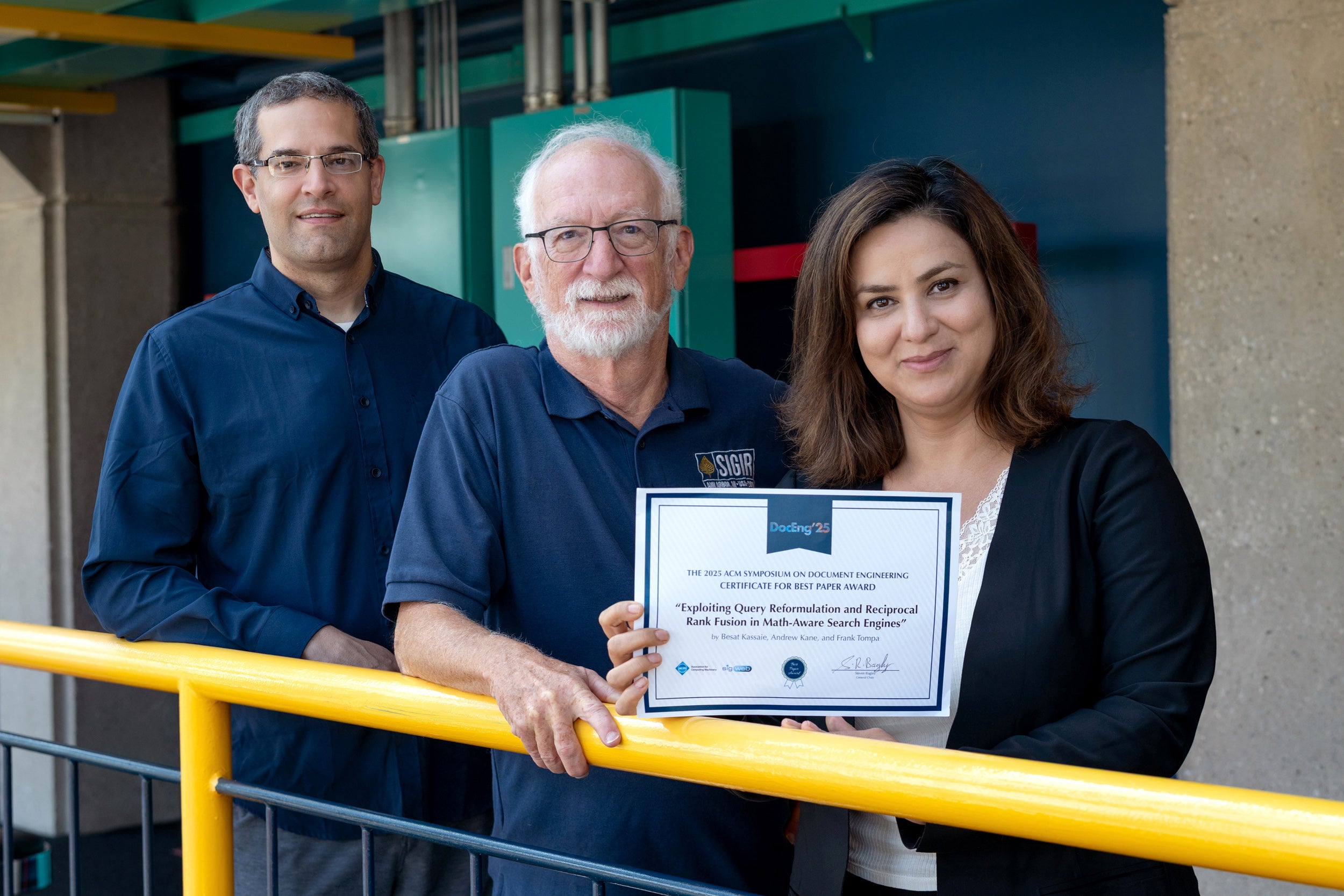

L to R: Andrew Kane, Frank Tompa, Besat Kassaie

Besat Kassaie is a postdoctoral researcher working with Professor Renée J. Miller. Her research interests focus primarily on data systems. She is particularly interested in challenges related to data intelligence, information extraction, unstructured data quality, and information retrieval. Her PhD thesis, supervised by Professor Tompa, introduced and addressed the problem of updatability in document databases.

Andrew Kane received his PhD in Computer Science in 2014 under the supervision of Professor Tompa. His research spans search engine space–time performance, mathematical search, the Janus search engine for locating Manipulus Florum quotation variants in digital documents, disk write latency, and the design and implementation of distributed systems.

Frank Tompa is a Distinguished Professor Emeritus. His teaching and research interests span data structures and databases, particularly the design of text management systems suitable for maintaining large reference texts, including the Oxford English Dictionary, as well as large, heterogeneous text collections.

About this award-winning research

This research addresses the challenge of retrieving answers to questions in mathematical community question answering platforms such as Mathematics Stack Exchange. Unlike conventional text-based queries, mathematical queries often include extensive context, detailed elaborations and distracting asides, all of which make it difficult for traditional search systems to match queries with relevant answers. Traditional retrieval techniques are efficient, but they rely heavily on exact word matching and usually lack contextual understanding.

To overcome these limitations, the research team investigated how large language models (LLMs) can assist in reformulating queries by selecting, augmenting, or re-weighting query terms. They evaluated their approach on the Answer Retrieval for Questions on Math (ARQMath) benchmarks, developed as part of the Conference and Labs of the Evaluation Forum. The query reformulation strategies they examined include using LLM responses to refine search terms, concatenating LLM responses with the original query, and re-weighting terms based on their presence or absence in the LLM output. The study also employed reciprocal rank fusion to combine the strengths of multiple reformulation methods into a single ranking.
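As an illustration of the fusion step, the following is a minimal sketch of reciprocal rank fusion in its commonly cited form, where each document's fused score is the sum of 1 / (k + rank) over the ranked lists in which it appears. The function name, the constant k = 60 (a widely used default), and the three example result lists are assumptions made for illustration; they are not taken from the paper.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document ids into a single ranking.

    rankings: list of lists, each ordered from best to worst.
    k: smoothing constant; 60 is a common default, assumed here.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        # Ranks are 1-based: the top result contributes 1 / (k + 1).
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document id for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical result lists, one per query reformulation strategy.
original_query = ["a1", "a2", "a3"]
llm_terms_only = ["a2", "a4", "a1"]
concatenated = ["a2", "a1", "a5"]

fused = reciprocal_rank_fusion([original_query, llm_terms_only, concatenated])
print(fused)
```

Because the fusion uses only rank positions, the retrieval scores produced by the different reformulations do not need to be on a comparable scale.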

Experimental results showed that combining strategies significantly outperformed using the original question alone. In two experiments with real-world mathematical questions, combining four strategies for term selection, term augmentation, and term re-weighting improved three standard information retrieval evaluation metrics over using the question as given: nDCG’@1000 by 5%, MAP’@1000 by 7%, and P’@10 by more than 9%.
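To make one of these metrics concrete, here is a small sketch of a "prime" precision measure such as P’@10. It assumes that the prime indicates that unjudged documents are removed from the ranking before the metric is computed, which is the convention used in ARQMath evaluations. The function name and the example data are hypothetical.

```python
def precision_prime_at_k(ranking, judged, relevant, k=10):
    """Precision at k computed after dropping unjudged documents.

    ranking: document ids ordered from best to worst.
    judged: set of ids that assessors have judged.
    relevant: subset of judged ids considered relevant.
    """
    # Remove unjudged documents first, then take the top k of what remains.
    judged_only = [d for d in ranking if d in judged]
    top_k = judged_only[:k]
    return sum(1 for d in top_k if d in relevant) / k


# Hypothetical ranking in which "u1" was never judged.
ranking = ["u1", "a1", "a3", "a2"]
judged = {"a1", "a2", "a3"}
relevant = {"a1", "a3"}

p_prime = precision_prime_at_k(ranking, judged, relevant, k=2)
print(p_prime)
```

In this example the unjudged document at the top of the list is skipped, so both of the top two judged results are relevant; without the prime adjustment, the same ranking would score lower at k = 2.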

By showing how LLM-assisted query reformulation and rank fusion improve the effectiveness of math-aware search engines, the study provides new tools to explore repositories of mathematical knowledge.

While exploratory, the findings suggest promising avenues for future work. The results provide insight into the effects of various ways to incorporate LLM responses into query reformulation. As next steps, the researchers suggest investigating whether these results apply more broadly to other mathematical corpora and query sets, and determining which properties of a query lead one rewriting strategy to outperform the others. If such properties can be identified, a hybrid, per-query strategy could lead to even greater improvements in mathematical information retrieval.

Excellence building on excellence: This award-winning paper builds on Choosing Math Features for BM25 Ranking with Tangent-L, which received the Best Paper Award at DocEng’18, the 18th ACM Symposium on Document Engineering, as well as on Dowsing for Math Answers, a Best of Labs paper presented at CLEF 2021, the 12th Conference and Labs of the Evaluation Forum.

To learn more about the research on which this article is based, please see Besat Kassaie, Andrew Kane, Frank Wm. Tompa. Exploiting Query Reformulation and Reciprocal Rank Fusion in Math-Aware Search Engines. In ACM Symposium on Document Engineering 2025 (DocEng ’25), September 2–5, 2025, Nottingham, United Kingdom.