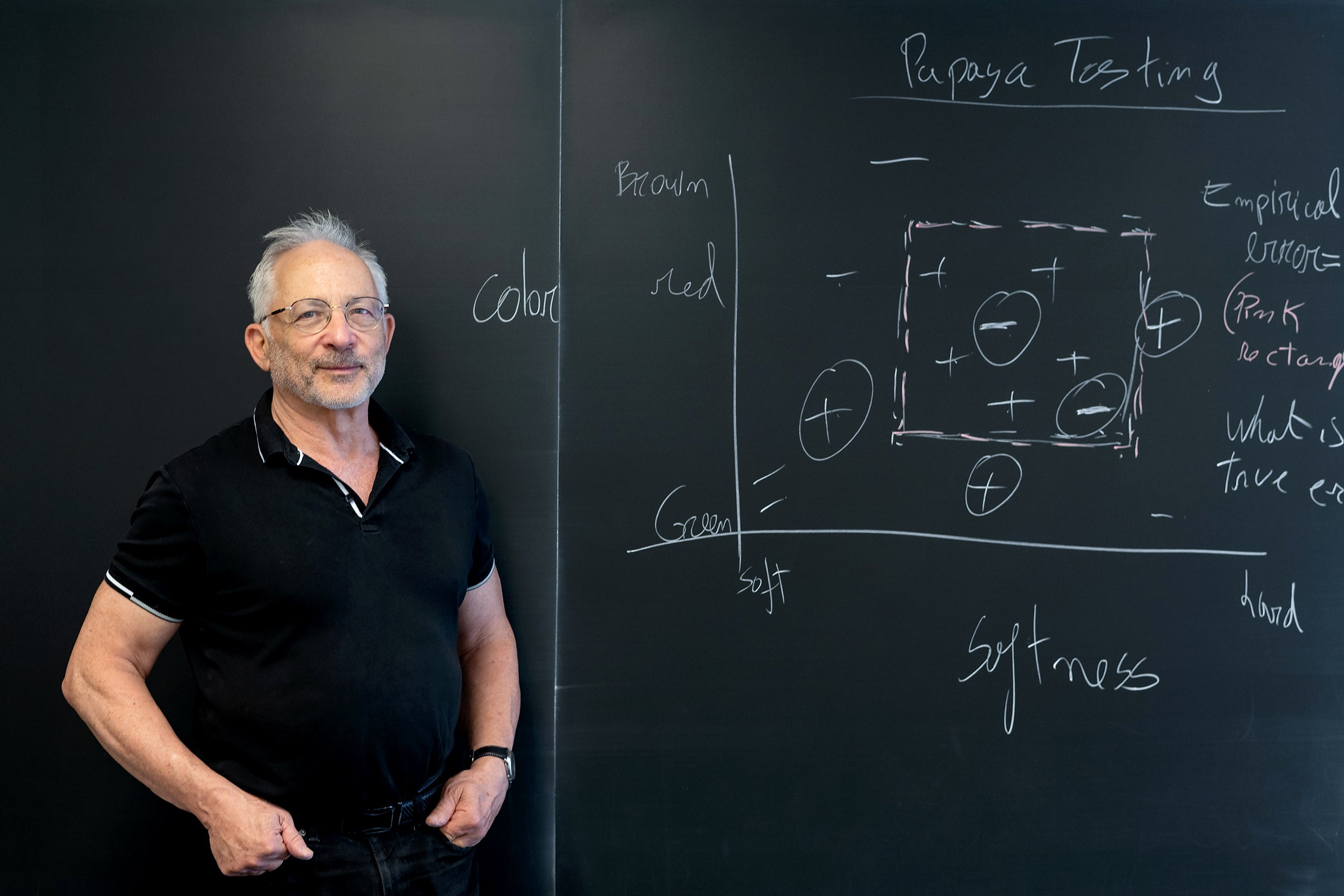

Professor Shai Ben-David has been named a 2025 Fellow of the Royal Society of Canada, the highest national recognition for researchers in the arts, humanities, social sciences and sciences. He is among 102 individuals across Canada elected this year for their exceptional scholarly, scientific and artistic achievements.

“Congratulations to Shai on this well-deserved honour,” said Raouf Boutaba, University Professor and Director of the Cheriton School of Computer Science. “Being named a Fellow of the Royal Society of Canada is a recognition of his many contributions to the theoretical foundations of machine learning. Shai’s research spans an impressive breadth, from machine learning theory and computational complexity to logic in computer science, mathematical logic and set theory.”

A prolific researcher, Professor Ben-David has authored or co-authored more than 170 publications, which have been cited more than 28,000 times with an h-index of 54 as of August 2025, according to Google Scholar. He was named a Fellow of the Association for Computing Machinery in 2024, appointed a University Research Chair in 2020, and a Canada CIFAR AI Chair in 2019. Work with his students has been recognized with five best paper awards — at the International Conference on Acoustics, Speech, and Signal Processing in 2005, the Conference on Learning Theory in 2006 and 2011, NeurIPS in 2018, and the Conference on Algorithmic Learning Theory in 2023.

With Shai Shalev-Shwartz, he co-authored Understanding Machine Learning: From Theory to Algorithms, a widely used textbook in machine learning theory courses. Since its publication in 2014, the book has been cited nearly 9,500 times. His public lectures on machine learning and on logic have become academic staples, viewed collectively more than 400,000 times on YouTube.

Foundational and influential research in machine learning

Professor Ben-David began researching machine learning in the early 1990s, well before the field’s current prominence. His early research identified fundamental theoretical challenges that would later become central to the discipline.

Domain adaptation and transfer learning

A common assumption in machine learning is that training data reflects the environment in which a model will be used. In real-world applications, however, this assumption is unlikely to hold. Professor Ben-David pioneered the theoretical study of domain adaptation, which addresses discrepancies between training and deployment environments. His work with John Blitzer, Koby Crammer and Fernando Pereira, presented at NIPS 2006 and later expanded in a 2010 paper in Machine Learning, serves to this day as the foundation for much follow-up work.

Change detection in data streams

Recognizing that data distributions can change over time, Professor Ben-David was among the first to develop tools to detect and analyze such changes, starting with his paper Learning Changing Concepts by Exploiting the Structure of Change with Peter Bartlett and Sanjeev Kulkarni, presented at COLT 1996. This was followed by his pioneering work Detecting Change in Data Streams with Daniel Kifer and Johannes Gehrke, presented at VLDB 2004, and Nonparametric Change Detection in 2D Random Sensor Field with Ting He and Lang Tong, the latter receiving a best paper award at ICASSP 2005.

Computational hardness of machine learning

Professor Ben-David established some of the first results on the computational hardness of learning. These include Hardness Results for Neural Network Approximation Problems with Peter Bartlett, presented at EuroCOLT 1999, and the NP-hardness of learning for natural concept classes in On the Difficulty of Approximately Maximizing Agreements, research with Nadav Eiron and Philip Long originally presented at COLT 2000.

Multi-class and real-valued learning

Professor Ben-David played a key role in expanding the understanding of machine learnability beyond binary classification. His influential work began with Characterizations of Learnability for Classes of {0,..., n}-Valued Functions with Nicolò Cesa-Bianchi and Philip Long, presented at COLT 1992. This was followed by Scale-sensitive Dimensions, Uniform Convergence and Learnability with Noga Alon, Nicolò Cesa-Bianchi and David Haussler, published in the Journal of the ACM in 1997, and by Multiclass Learnability and the ERM Principle with Amit Daniely, Sivan Sabato and Shai Shalev-Shwartz, which received a best student paper award at COLT 2011 and was subsequently published in the Journal of Machine Learning Research in 2015. Together, these works laid the theoretical foundation for the learnability of multi-class and real-valued functions.

Methodical analysis of clustering paradigms

Clustering is a core task in data analysis. Professor Ben-David made foundational contributions to developing a general theory of clustering, as opposed to investigating particular algorithms or assuming narrowly defined data-generating processes. His NIPS 2008 paper with Margareta Ackerman initiated a long line of research, developing a theory for taxonomizing clustering methods according to their input–output behaviours. This work highlights the crucial importance of carefully selecting the appropriate clustering method for a given task. His follow-up papers introduced tools for that important and under-investigated issue.

Independence of set theory results in computer science

Professor Ben-David is also a pioneer in research on the impact of axiomatic mathematics on computer science. His 1991 paper with Shai Halevi, On the Independence of P versus NP, proved that the independence of P vs NP from a strong axiomatic theory is equivalent to the existence of almost-polynomial-time SAT solvers.

Solving long-standing open problems

Beyond identifying new research directions, Professor Ben-David has also resolved several long-standing open problems in machine learning. His paper Settling the Sample Complexity for Learning Mixtures of Gaussians with Hassan Ashtiani, Nick Harvey, Christopher Liaw, Abbas Mehrabian and Yaniv Plan determined how much training data is necessary and sufficient to learn a mixture of high-dimensional Gaussian distributions.

His recent paper with Tosca Lechner, Inherent Limitations of Dimensions for Characterizing Learnability of Distribution Classes, presented at COLT 2024, resolved open problems that had stood for some 30 years concerning the characterization of learnability of families of probability distributions, among other learning setups.

In addition to these fundamental contributions, Professor Ben-David has also shown some unexpected limitations of popular algorithmic paradigms. Notable among these contributions are the following.

On the Power of Randomization in On-line Algorithms, work with Allan Borodin, Richard Karp, Gabor Tardos and Avi Wigderson published in Algorithmica in 1994, showed that the then-common belief that randomization can improve the competitive ratio of online algorithms was essentially wrong.

Limitations of Learning via Embeddings in Euclidean Half-spaces, work with Nadav Eiron and Hans Ulrich Simon presented at COLT 2001, proved strong inherent shortcomings of the popular support vector machines paradigm.

A Sober Look at Clustering Stability, his award-winning COLT 2006 paper with Ulrike von Luxburg and Dávid Pál, indicated surprising fallacies in common usage of stability as a tool for choosing clustering parameters.

The fairness of machine learning–based decisions has attracted growing attention over the past decade. One approach toward fairness has been the development of fair representations. In Inherent Limitations of Multi-Task Fair Representations, a paper with Tosca Lechner, he showed that this goal is not reachable: no data representation can guarantee the fairness of classifiers based on it while also allowing accurate predictions across different tasks.

Service to the research community

Professor Ben-David served as president of the Association for Computational Learning from 2009 to 2012 and has held editorial roles at leading journals. He was program committee chair for EuroCOLT in 1997, COLT in 1999 and ALT in 2004, and he continues to serve regularly as a senior area chair for COLT, ALT, NeurIPS, ICML and AISTATS.

Fellows of the Royal Society of Canada at the Cheriton School of Computer Science

Professor Ben-David is the 12th faculty member at the Cheriton School of Computer Science to be named a Fellow of the Royal Society of Canada. Previously elected Fellows are N. Asokan, Raouf Boutaba, Richard Cleve, Ihab Ilyas, J. Alan George, Srinivasan Keshav, Ming Li, Renée J. Miller, J. Ian Munro, M. Tamer Özsu and Douglas Stinson.

Royal Society of Canada

Founded in 1882, the RSC comprises the Academy of Arts and Humanities, Academy of Social Sciences, Academy of Science and the RSC College. The RSC recognizes excellence, advises the government and society, and promotes a culture of knowledge and innovation within Canada and with other academies around the world.